The AI Readiness Reality Check

Data leaders across industries are feeling the pressure as AI continues to evolve. Data teams are told to “start piloting generative use cases” or “operationalize machine learning.” Budgets are finally there, executive buy-in is strong, and expectations to deliver are high.

Then reality hits.

The models are ready. The ambition is real. But the data isn’t.

Despite more AI tools being available than ever, data teams are stuck at the starting line. Currently, fewer than a third of companies report that their data is AI-ready.

This is the paradox. AI has never been more accessible, yet the biggest blocker isn’t model complexity or computing power; it’s the data architecture. Data teams are pouring effort into integration, transformation and cleanup work that was never designed for real-time, AI-driven systems.

Business leaders continue to push forward, while the underlying data architecture is pulling back.

Before any organization can unlock AI’s potential, it has to make a shift: the old way of preparing data doesn’t work when the goal is intelligence in motion.

The Traditional AI Data Preparation Journey

Current Practices

For most organizations, the path to AI readiness follows a rigid, linear playbook:

- Audit and inventory existing data

- Establish data governance policies

- Improve data quality through deduplication and enrichment

- Centralize data into a warehouse or lake

- Enable API connectivity or middleware

- Apply labels or metadata for classification

- Build and maintain ETL pipelines to feed downstream systems

In theory, this process lays the foundation for analytics and AI, but in practice, it’s an extremely resource-heavy project that can last years. Each stage introduces its own complexity, tooling overhead and personnel demands.

Most data teams aren’t short on skill; they’re short on time. Engineers are pulled into repetitive pipeline maintenance. Scientists are sidetracked by data cleanup. Architects are buried in integration tickets. Meanwhile, the actual AI work remains aspirational.

Instead of becoming intelligence engines, data teams become custodians of broken infrastructure. And by the time everything is connected, the data is no longer relevant.

Hidden Costs of the Traditional Approach

While the linear pipeline looks straightforward on a slide deck, it’s actually riddled with hidden costs, including:

- Human capital: Skilled data professionals spend the majority of their time on prep work instead of AI delivery. According to the Pragmatic Institute, data teams spend 80% of their time on data preparation activities instead of analysis and innovation.

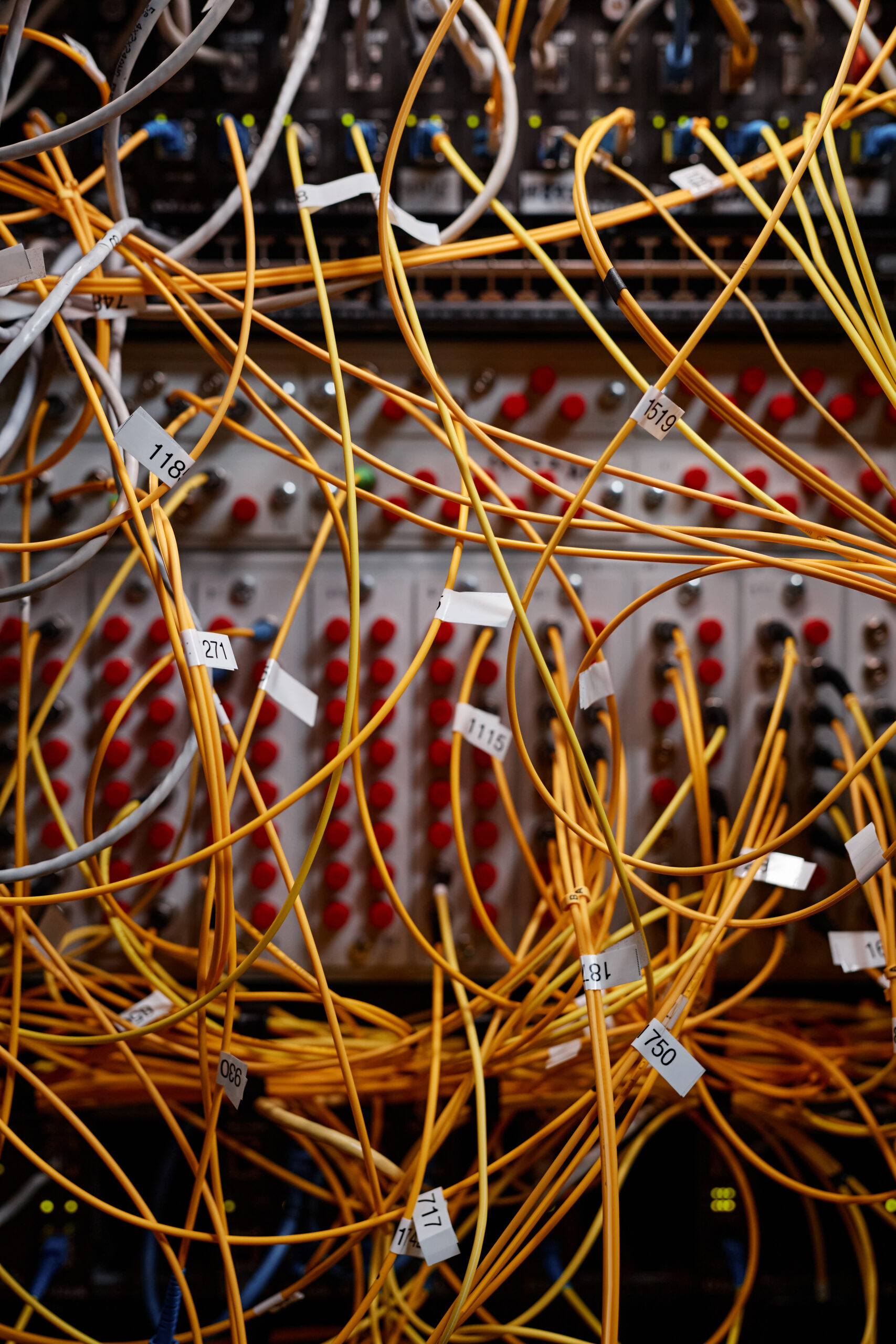

- Infrastructure complexity: Each integration, transformation and sync point adds friction. Every new connection is another potential failure point that has to be monitored and maintained. In organizations using 130 or more systems, this quickly becomes a tangled web of dependencies that puts enormous strain on both IT teams and budgets.

- Coverage gaps: Even after months of prep, not all data makes it through. Some systems are excluded because of integration challenges, while governance slows access to others. On average, only 32% of organizational data is available for AI.

For AI to work, data needs to be accessible, reliable and current. Traditional approaches simply cannot deliver on all three.
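To put the infrastructure-complexity point in numbers, here is a minimal sketch of how connection counts can grow. It assumes the worst case, where every system links directly to every other one; real estates are rarely fully meshed, so treat the result as an upper bound rather than a measurement. The function names are hypothetical and chosen for illustration only.

```python
def max_point_to_point_links(n_systems: int) -> int:
    """Upper bound on direct links: every system paired with every other one."""
    if n_systems < 0:
        raise ValueError("n_systems must be non-negative")
    return n_systems * (n_systems - 1) // 2


def links_added_by_next_system(n_systems: int) -> int:
    """Worst case: one more system needs a link to each existing system."""
    return max_point_to_point_links(n_systems + 1) - max_point_to_point_links(n_systems)


if __name__ == "__main__":
    # 130 systems is the figure used in the list above.
    print(max_point_to_point_links(130))    # 8385
    print(links_added_by_next_system(130))  # 130
```

Even if only a small fraction of those possible links exist, each one is a connection that has to be monitored and maintained.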

Why Traditional Data Prep Creates AI Bottlenecks

Traditional pipelines were built for analytics, not AI. They centralize, cleanse and organize data for dashboards or scheduled reports. But AI doesn’t consume data the same way humans do. To work, AI needs immediate access to distributed, contextual and real-time data.

That’s where traditional prep begins to break down.

- The Centralization Trap: Data lakes and warehouses act as single points of failure. The average data freshness in a warehouse is 24 to 48 hours, too old for AI systems that need to operate in real time. Governance requirements often slow ingestion further, creating even more latency.

- The Integration Web: Each new data source requires its own set of APIs, connectors or batch jobs. Companies spend massively on integration projects, yet most are not completed on time. API maintenance becomes unsustainable as the number of systems grows.

- The Quality Paradox: Even when the data does arrive, it’s often degraded. ETL processes introduce delay, transformation errors and synchronization mismatches, all of which erode data quality. And when multiple sources each hold only part of the truth, the data cannot be trusted.

These problems build on each other. By the time the data is finally ready, it’s outdated. AI needs to act in real time, and traditional systems weren’t built for that speed, so they can’t keep up.
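The freshness gap described above can be made concrete with a small check. This is a minimal sketch, not a production monitor: the `data_age` and `is_stale` helpers and the five-minute freshness budget are assumptions for illustration, and the 24-hour load age is taken from the low end of the warehouse range cited above.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def data_age(last_loaded_at: datetime, now: Optional[datetime] = None) -> timedelta:
    """How old the data is, measured from its last successful load."""
    current = now if now is not None else datetime.now(timezone.utc)
    return current - last_loaded_at


def is_stale(last_loaded_at: datetime, max_age: timedelta,
             now: Optional[datetime] = None) -> bool:
    """True when the data is older than the consumer's freshness budget."""
    return data_age(last_loaded_at, now) > max_age


if __name__ == "__main__":
    # Fixed clock so the example is reproducible.
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    nightly_load = now - timedelta(hours=24)

    # Hypothetical real-time consumer with a five-minute budget.
    print(is_stale(nightly_load, timedelta(minutes=5), now))  # True
    # A daily dashboard with a 48-hour budget tolerates the same data.
    print(is_stale(nightly_load, timedelta(hours=48), now))   # False
```

The same batch load passes for a scheduled report and fails for a real-time consumer, which is the mismatch between analytics-era pipelines and AI workloads.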

Conclusion: Preparing for a New Data Foundation

The gap between AI ambition and data reality isn’t just a technical problem; it’s an architectural one. Traditional data prep methods weren’t built for real-time intelligence, and pushing them harder won’t close the gap. As more organizations realize this, the conversation is shifting from pipelines to platforms, from centralization to connection.

Check your AI-readiness here.

If you’re ready to explore how an Actionable Virtual Data Layer (AVDL) can provide a foundation for making data AI-ready quickly, book a meeting with me here.